PUBLISHED ON

14 August 2020

The Voicebot Mechanic

About the Product

The Console is an all-encompassing solution for creating conversational flows, monitoring them, and collecting data to improve the performance of voice bots. It is a web-based tool used internally by Vernacular.ai's team. Because even a minor adjustment to a voice bot's ML model can produce multiple outcomes, testing is essential to ensure the integrity and functionality of the system.

What are Voicebots?

Voice bots are AI-powered software that lets callers navigate interactive voice response (IVR) systems by speaking, typically in natural language. Callers no longer need to listen to menus and press the corresponding numbers on their keypads. Instead, they interact with the IVR in a way that resembles a conversation with a live operator.

At Vernacular, the call flow and specific conversation states were tested by placing calls to the phone number assigned to a particular voice bot. A single caller made approximately 80–100 calls per day, each lasting around 58 seconds on average. Faulty calls were identified and reported through a separate interface called Call Reporting (which will be discussed in a separate article).

Problems faced by the users

The existing process was tedious and time-consuming. Voice bots had to be tested regularly, because even a minor change in the ML model could have significant repercussions (the butterfly effect). To keep up, each caller had to make approximately 80–100 calls per day. With new conversational flows frequently being developed for various clients (organizations), the process urgently needed simplification, and the efficiency of testing and reporting faulty calls was declining.

The major problems identified included:

High call frequency: Testing a specific conversation state necessitated repetitive calling and performing the same steps to reach and check that state.

Long call duration: an average of 120 seconds per call.

Sneak Peek into the Design Process

Setting Project Goals

Remove telephony dependency.

Enable faster testing and data generation.

Report all faulty conversation turns during the call itself.

Proposed Solution

Develop a web-based service within Console that simulates the calling experience. The service makes testing call flows and specific conversational turns more flexible by allowing a state to be tested repeatedly within a single call.

Defining Job Stories

Because the product was exclusively for internal use, our users were readily accessible.

We opted for Job Stories instead of creating User Personas and User Stories. We wanted a quicker approach and a chance to delve deeper into Jobs Theory. Trying this approach within a sprint allowed us to evaluate its pros and cons in practice.

When I make a call, I want to loop into one conversational state so I can test it repeatedly.

When I make a call, I want to loop into one conversational state so I can report the incorrect intents predicted by the ASR (Automatic Speech Recognition).

When I’ve answered the voice bot, I want to know the predicted intent so I can report that conversational turn there and then if it is faulty.

When I make a call, I want to select the language and version for the voice bot so that I can conduct more tests.

These four Job Stories guided our ideation of features for the MVP.

Important Decision

We decided against letting users type to the voice bot on the interface, because we did not want the product to turn into a chatbot. Instead, users speak their input and read the bot's textual output. Although the bot's primary output was text, users could also play the audio prompt.

The reasons behind this decision were:

We wanted to replicate the on-call experience using an interface.

Enabling text input might have led us to assume that Automatic Speech Recognition (ASR) was working perfectly, causing us to overlook areas for improvement.

This decision was instrumental in creating a new, faster user experience in which users speak their queries and read the bot's prompts. Notably, it helped reduce the average call duration from 120 seconds to 90 seconds.

User Flow

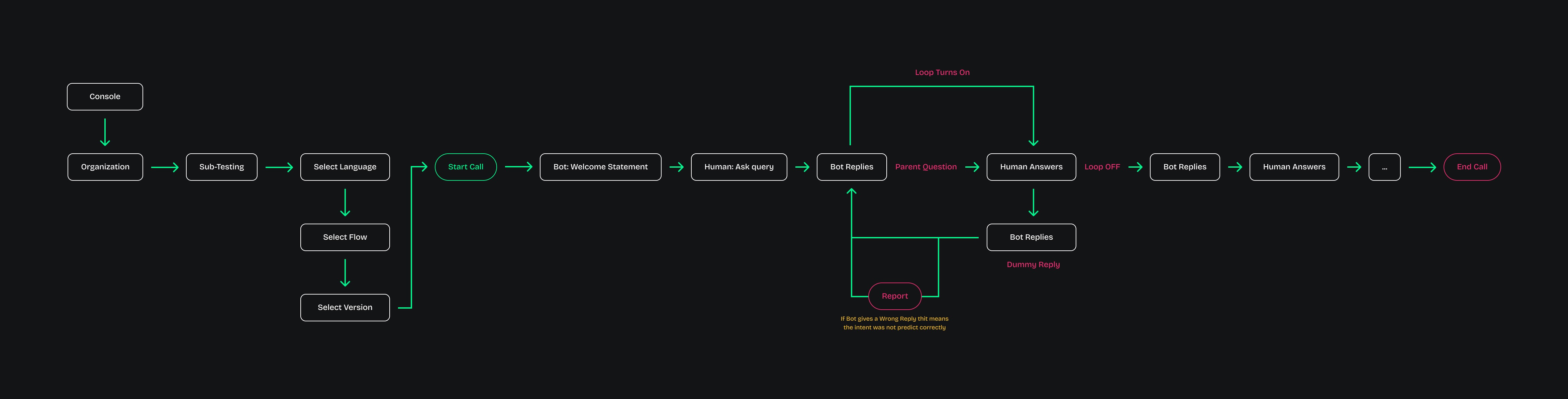

The flow diagram below illustrates the user's journey during a call on the interface:

Shipping the MVP

Because of time constraints and code limitations, the UI received less attention and still needs improvement. Nevertheless, the Sub-Testing service is fully functional. The MVP has been shipped, and users are invited to use it, test it, and provide feedback. This iterative process aims to further reduce friction in the overall experience.

Impact the changes created

The demo of the Sub-Testing MVP received an excellent response. Team members who regularly tested voice bots immediately began using the new service within Console.

Key statistics collected include:

A reduction in the average call time from 58 seconds to 33 seconds.

Savings of up to 1 hour per day for voice bot testers, which they can now spend on more productive work.

Significant improvement in the rate of data generation and collection.

Final Thoughts & Learnings

We gained valuable hands-on experience using Job Stories. As a UX designer, my goal is always to deliver the best user experience, but in certain situations, making the product functional and getting it running takes precedence. This is an inherent aspect of product design.

Designing for an AI-based startup introduced a new set of challenges, particularly in dealing with the unpredictability of the voice bot's predictions, where outcomes varied even for the same input.

Collaborating closely with Amit Manchanda (Associate Product Manager at Vernacular.ai) was an excellent experience. It deepened my understanding of the broad spectrum of design, taught me to account for limitations, and helped me ask the right questions to ensure a comprehensive approach.